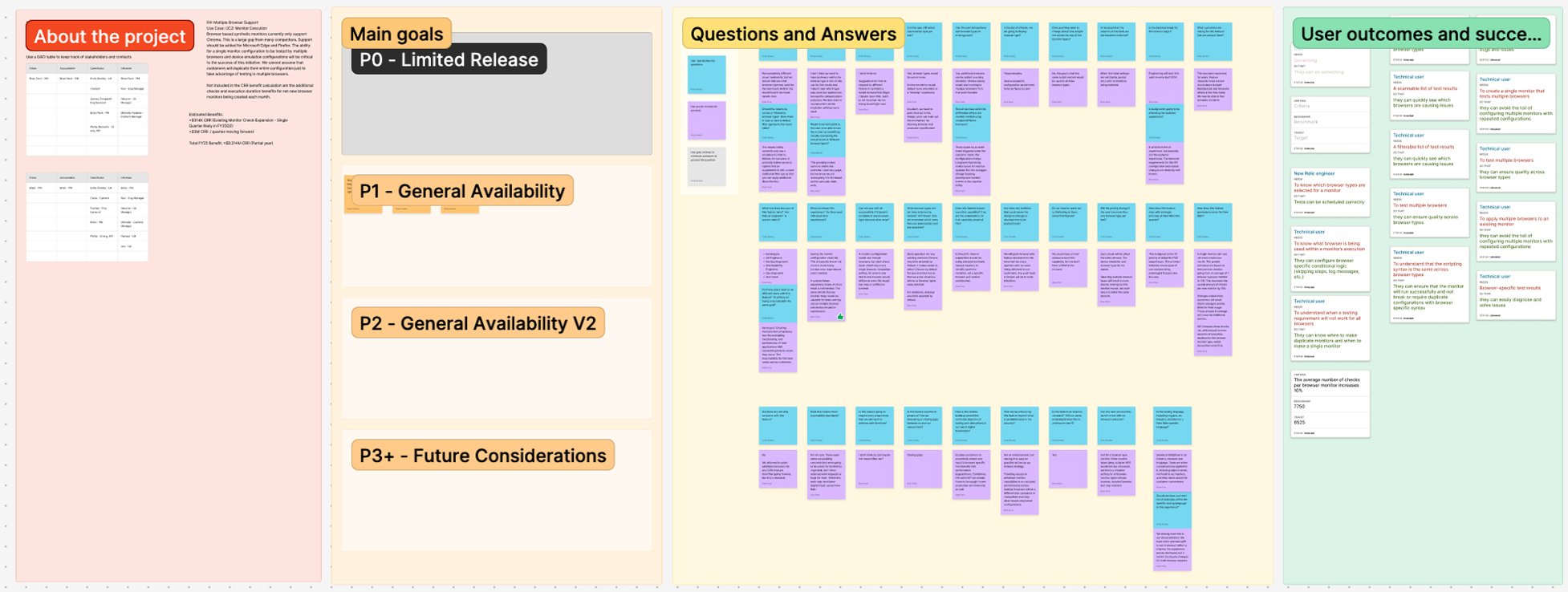

My user-centered design approach emphasized "jobs to be done" so that the solution addressed real-world tasks and outcomes for DevOps engineers, SREs, internal product teams, QA automation engineers, and release managers. This focus gave those stakeholders the control, customization, traceability, and proactive regression detection they need for smooth, low-risk deployments. It was crucial in an enterprise technical design context, where specific user needs are paramount.

The design process navigated several key constraints. Testing workflows varied widely across teams, which called for a flexible solution. A significant challenge was achieving parity with competitors while seamlessly integrating CAT into New Relic’s existing pipeline. Furthermore, test results had to be traceable back to their source, even across multiple services, a critical requirement that informed design decisions.

As the sole designer from discovery through launch, I took an iterative approach that began with mapping current team testing methodologies, from fully automated CI/CD pipelines to manual regression testing. I collaborated extensively with engineering to define CAT's integration points within New Relic’s observability tools and worked through various information architecture models for presenting test results. Crucially, I validated flows with internal product teams to ensure ease of configuration and direct traceability of failures to code or deployment changes.

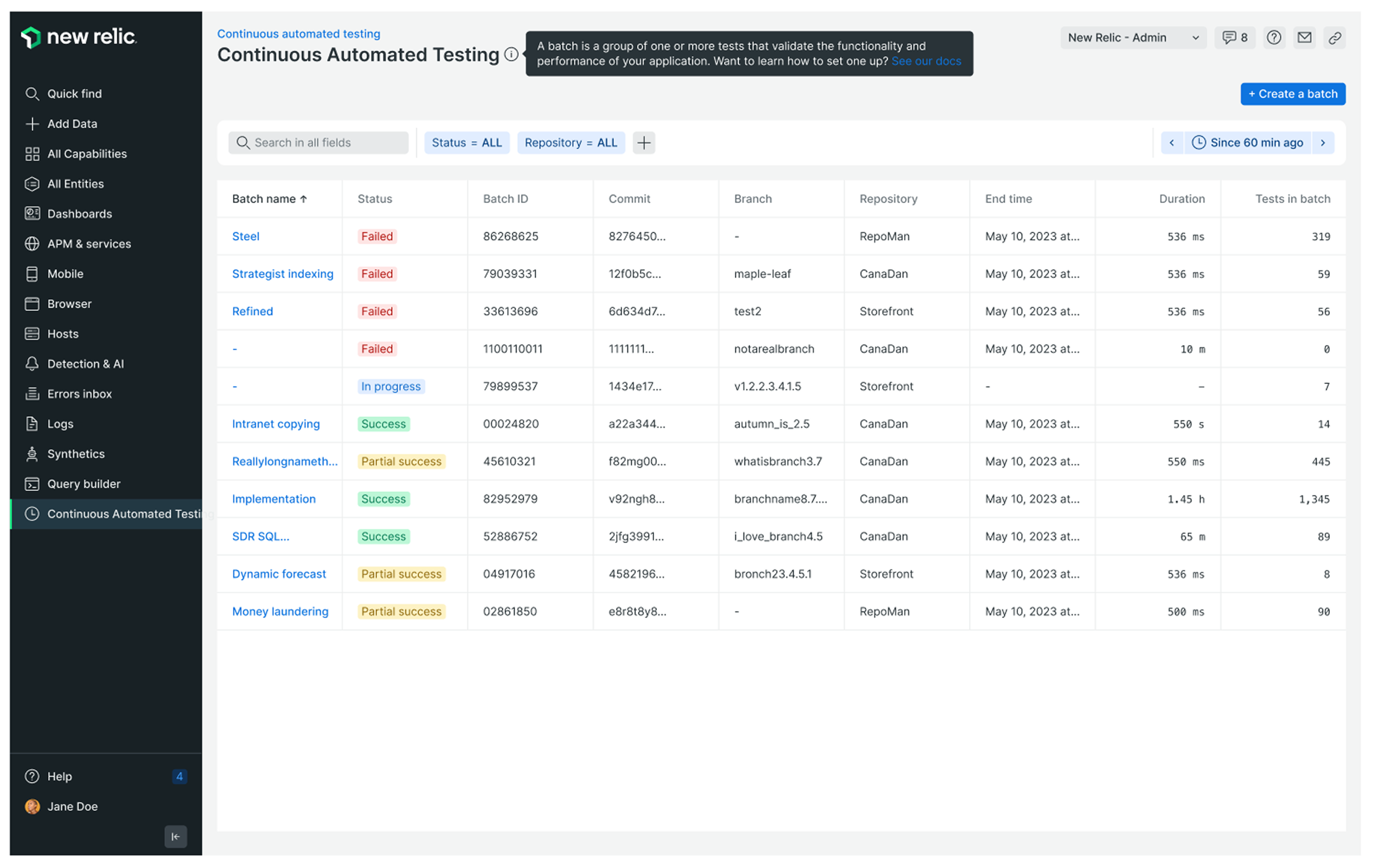

This process produced several design and product highlights that led to tangible improvements:

• Enabled pre-production visibility for internal teams.

• Reduced test setup time.

• Clarified root cause through intuitive UI and metadata.

• Designed a robust alerting structure that presents the most critical, frequently failing results upfront.

• Linked failed tests directly to specific deployment changes or configuration updates to vastly improve traceability.

• Developed configuration flows that fit naturally into CI/CD pipelines, minimizing the need for tool-switching.

• Successfully onboarded 4 internal teams during the initial phase.

This project was my first experience with a feature that was technically net-new but very tightly coupled with an existing product (Synthetic Monitoring). It presented unique challenges: scaling token logic and component models for flexible assertions, introducing new layouts without breaking consistency, and navigating rapid iteration while preserving accessibility and clarity. The project also taught me the importance of strategic reuse; I laid the foundation for future CAT-Synthetic Monitoring integration by intentionally designing modular pieces that could bridge both products. This was a period of strategic work under pressure, and it significantly shaped my approach to fast-moving, cross-surface UX systems.